Daniele Lezzi, Senior Researcher, Barcelona Supercomputing Center

and Francesc Lordan, Researcher, Barcelona Supercomputing Center

The fog-to-cloud (F2C) paradigm has recently gained popularity as a way to meet the requirements of collecting and processing data in IoT scenarios, where information is first processed close to where it is produced (the Edge) and the Cloud is used for batch processing of the aggregated data coming from the devices.

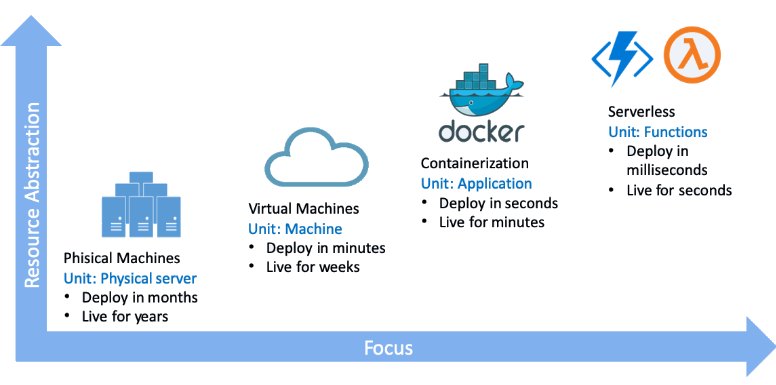

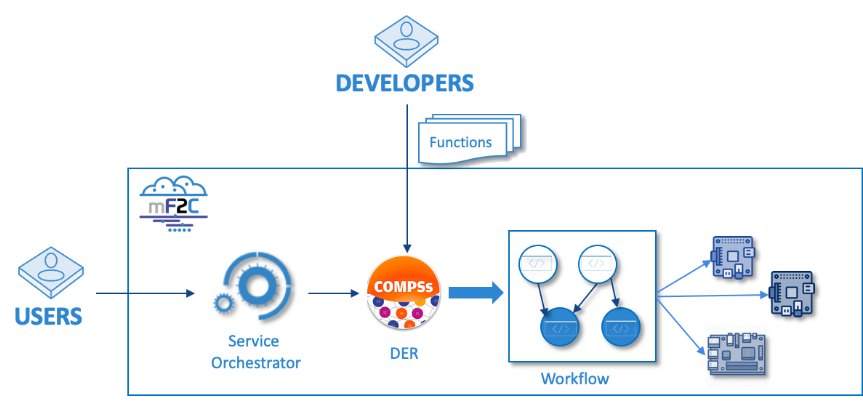

In a previous post we described the Distributed Execution Runtime (DER) of the mF2C platform, which is based on the COMPSs framework and transparently distributes and executes the different parts of an application in F2C environments. Such applications are characterized by three computing patterns: sense-process-actuate, where an event coming from a sensor triggers an execution; stream processing, where data is continuously produced and analyzed to generate other data; and batch computing, which analyzes larger amounts of data, for example with machine learning techniques, to periodically update a trained model. All these scenarios can benefit from a Function-as-a-Service (FaaS) execution model, in which applications are developed as a set of functions that run in a stateless, serverless mode when a specific event is triggered.

The DER framework can easily be adopted to develop Fog applications targeting the three computing patterns described above. Because Fog nodes can join and leave the infrastructure unpredictably, the solution builds on autonomous agents that execute functions in a serverless, stateless manner. At execution time, the agent converts the function into a task-based workflow whose inner tasks correspond to other functions that, in turn, are executed on the local computing devices or offloaded to an associated agent.
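The local-or-offload decision described above can be illustrated with a minimal Python sketch. It is not the DER or COMPSs implementation: the `Agent` class, its `slots` field, the `run_function` method and the `read_sensor` task are all hypothetical names chosen for illustration, and the placement policy (fill local slots first, then use the first associated agent with room) is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple

# A task is a function plus its positional arguments (hypothetical format).
Task = Tuple[Callable[..., Any], tuple]


@dataclass
class Agent:
    """Hypothetical agent; not the mF2C/COMPSs agent API."""
    name: str
    slots: int                                   # tasks this node accepts
    peers: List["Agent"] = field(default_factory=list)
    assigned: int = 0
    log: List[str] = field(default_factory=list)

    def has_free_slot(self) -> bool:
        return self.assigned < self.slots

    def execute(self, task: Task) -> Any:
        func, args = task
        self.assigned += 1
        self.log.append(func.__name__)           # record where the task ran
        return func(*args)

    def run_function(self, tasks: List[Task]) -> List[Any]:
        """Run a function expressed as a workflow of inner tasks."""
        results = []
        for task in tasks:
            # Assumed policy: prefer the local node, else offload to a peer.
            target = self if self.has_free_slot() else next(
                (p for p in self.peers if p.has_free_slot()), None)
            if target is None:
                raise RuntimeError("no agent available for task")
            results.append(target.execute(task))
        return results


def read_sensor(sensor_id: int) -> float:
    # Stand-in for real sensor access.
    return 20.0 + sensor_id


cloud = Agent("cloud", slots=10)
edge = Agent("edge", slots=2, peers=[cloud])
readings = edge.run_function([(read_sensor, (i,)) for i in range(4)])
print(readings, edge.log, cloud.log)
```

In this sketch the edge agent runs the first two tasks itself and offloads the remaining two to the associated cloud agent, while the caller only sees a single `run_function` call.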

Benefits of the FaaS task-based approach

From the developer's point of view, this approach makes designing an application much lighter: the developer only has to focus on the program logic, following the task-based approach, without worrying about parallelization or the distributed deployment of the code.
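To give a feel for what "focusing on the program logic" can look like, here is a small Python sketch of a task-based style. It does not use COMPSs: the `task` decorator and the `wait_on` helper are hypothetical stand-ins built on the standard library's thread pool, assumed here only to show that the application code stays sequential-looking while the calls run concurrently.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from functools import wraps
from typing import Any, Callable, List

# Hypothetical stand-in for a runtime; a real one would also place tasks
# on remote nodes rather than on local threads.
_pool = ThreadPoolExecutor(max_workers=4)


def task(func: Callable[..., Any]) -> Callable[..., Future]:
    """Mark a function as a task: calling it returns a future immediately."""
    @wraps(func)
    def submit(*args: Any) -> Future:
        return _pool.submit(func, *args)
    return submit


def wait_on(futures: List[Future]) -> List[Any]:
    """Block until the given tasks finish and return their results."""
    return [f.result() for f in futures]


@task
def square(x: int) -> int:
    # Plain program logic; no threading or deployment code here.
    return x * x


# The application reads like sequential code.
partials = [square(n) for n in range(5)]   # five independent tasks
total = sum(wait_on(partials))
print(total)
```

The design point is that parallelism is declared once, in the decorator, rather than spread through the application logic.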

Users, in turn, can access very large infrastructures such as clouds while benefiting from the low latency of edge computing, using the same API to execute their applications.