Technical University of Braunschweig

The emergence of hybrid computing systems that integrate traditional cloud computing with the edge/fog computing paradigms has brought new challenges. Whereas in traditional cloud computing resources are centralized and static, in joint fog-to-cloud environments the heterogeneity and dynamicity of devices make QoS provisioning an open challenge. Researchers have focused on developing novel technologies, mechanisms, and methods to optimize QoS provisioning in diverse and heterogeneous network environments. Due to the complexity of the problem, special emphasis has recently been placed on machine learning approaches.

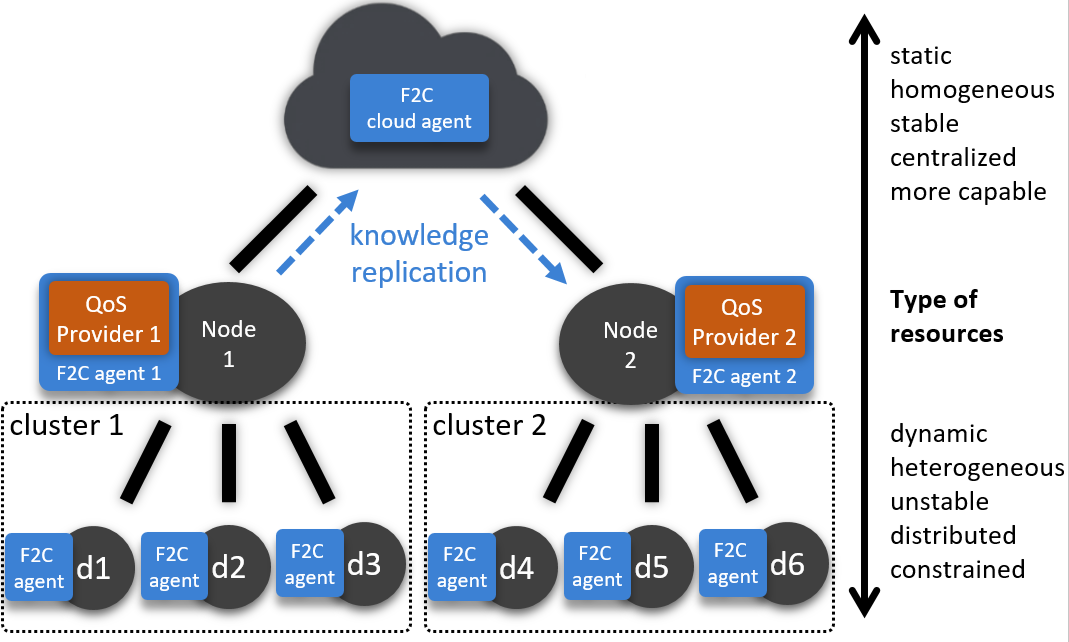

One such solution is a QoS provider mechanism based on reinforcement learning, developed as an integral component of the mF2C system, which builds on the fog-to-cloud architectures proposed by the OpenFog Consortium standardization body. The general network architecture is hierarchical and includes several layers, as shown in Fig. 1. The bottom layer consists of constrained devices representing the fog, the middle layers include more computationally capable devices, and the top layer represents the cloud. The network manager has various components that aim to provide the best experience to the user. The QoS provider is an important module that ensures the service level agreements (SLAs) are fulfilled by blocking or allowing the usage of devices based on their availability. To do so, it uses telemetry data that records which agents failed in past executions. Learning from previous executions calls for machine learning techniques to enhance the QoS provider's decision-making mechanism. The goal is to ensure the best resource allocation and assignment by avoiding the assignment of devices that are expected to fail.

Figure 1. Fog to Cloud architecture [1]
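To make the QoS provider's input and output concrete, the following sketch shows a naive, non-learning baseline: it turns past failure telemetry into a block (1) or allow (0) decision per device using a fixed failure-rate threshold. This is not the mF2C mechanism; the record format `(device_id, failed)`, the function `block_decisions` and its `threshold`/`min_runs` parameters are hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def block_decisions(
    telemetry: Iterable[Tuple[str, bool]],
    threshold: float = 0.5,
    min_runs: int = 1,
) -> Dict[str, int]:
    """Map each device to 1 (blocked) or 0 (allowed) from its past failure rate.

    Hypothetical baseline: `telemetry` holds (device_id, failed) records,
    one per previous service execution that involved the device.
    """
    runs: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for device_id, failed in telemetry:
        runs[device_id] += 1
        failures[device_id] += int(failed)

    decisions: Dict[str, int] = {}
    for device_id, n in runs.items():
        failure_rate = failures[device_id] / n
        # Block only devices with enough history whose failure rate exceeds the threshold.
        decisions[device_id] = int(n >= min_runs and failure_rate > threshold)
    return decisions


history = [
    ("d1", False), ("d1", False),
    ("d2", True), ("d2", True), ("d2", False),
    ("d3", True),
]
print(block_decisions(history))  # {'d1': 0, 'd2': 1, 'd3': 1}
```

Here `d2` (two failures in three runs) and `d3` (one failure in one run) are blocked. With `min_runs=2`, `d3` would stay allowed because it lacks history. Such a static rule has to be hand-tuned and cannot adapt as device behaviour changes, which is what a learning-based QoS provider is meant to address.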

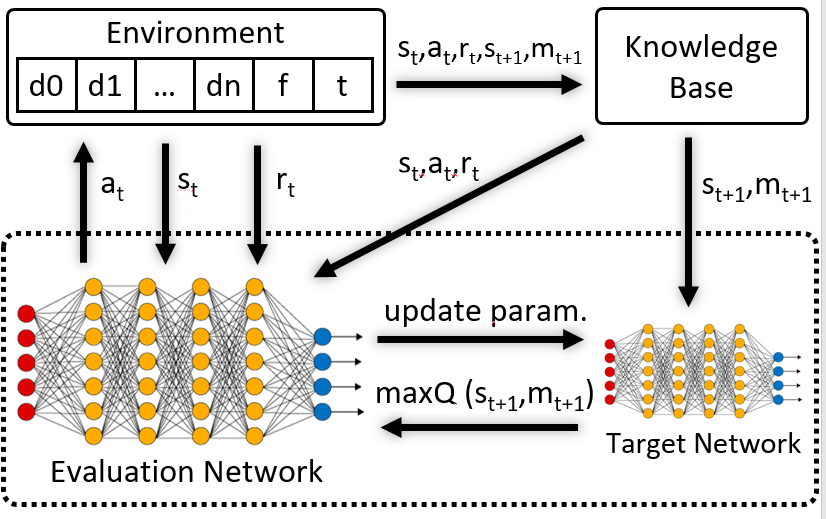

Reinforcement Learning (RL) is a machine learning approach that considers a decision system acting in a dynamic environment and maximizing a predefined cumulative reward. In our case, RL is the natural ML method to apply, given the dynamics of the network environment (i.e., actions need to be taken in real time) and the stochastic failure behaviour (i.e., the lack of an exact analytical model). Fig. 2 shows the model design. The environment consists of an array of booleans (d1, d2, …, dn), each representing a device in a cluster, plus a specific boolean f indicating that the service execution failed, plus an integer t denoting the time step. Each boolean dx indicates whether device dx is blocked for allocation (value 1) or allowed (value 0). The environment is modified into a new state on every iteration, and each new state serves as the input to the evaluation network. In every iteration t, the environment produces a new state s_t, a new reward r_t is calculated based on the previous action, and the network weights are updated. The work published in [1] details the RL mechanism, gives a simplified example and presents results, demonstrating a real, successful application of integrating intelligence into the QoS provider mechanism of a fog-to-cloud system.

Figure 2. Deep Reinforcement Learning model [1]
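The iteration described above (observe state s_t, act, receive reward r_t, update the weights) can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the model of [1]: the deep evaluation network is replaced by a linear Q-function (one weight vector per action, i.e., a network without hidden layers) trained with semi-gradient Q-learning. The action space (toggle one device's block bit, or keep the current configuration), the failure model, the reward, and all names (`FogClusterEnv`, `train`, `greedy_rollout`) are hypothetical. Only the state layout [d1, ..., dn, f, t] follows the description above.

```python
import random
from typing import List, Tuple


class FogClusterEnv:
    """Hypothetical toy cluster: each device has a hidden failure probability.

    The state mirrors the description above: [d1, ..., dn, f, t], where
    dx = 1 means device x is blocked, f = 1 means the last execution failed,
    and t is the time step.
    """

    def __init__(self, fail_probs: List[float], horizon: int = 20, seed: int = 0):
        self.fail_probs = fail_probs
        self.horizon = horizon
        self.rng = random.Random(seed)
        self.reset()

    def reset(self) -> List[int]:
        self.blocked = [0] * len(self.fail_probs)  # all devices allowed
        self.failed = 0
        self.t = 0
        return self.state()

    def state(self) -> List[int]:
        return self.blocked + [self.failed, self.t]

    def step(self, action: int) -> Tuple[List[int], float, bool]:
        # Assumed action space: 0..n-1 toggles that device's bit, n keeps the config.
        if action < len(self.blocked):
            self.blocked[action] ^= 1
        allowed = [i for i, b in enumerate(self.blocked) if b == 0]
        # Assumed failure model: execution fails if any allowed device fails.
        self.failed = int(any(self.rng.random() < self.fail_probs[i] for i in allowed))
        # Hypothetical reward: -1 on failure, else the fraction of devices in use.
        reward = -1.0 if self.failed else len(allowed) / len(self.blocked)
        self.t += 1
        return self.state(), reward, self.t >= self.horizon


def features(state: List[int], horizon: int) -> List[float]:
    *bits, f, t = state
    return [float(b) for b in bits] + [float(f), t / horizon, 1.0]  # last = bias


def q_values(w: List[List[float]], x: List[float]) -> List[float]:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def argmax(q: List[float]) -> int:
    return max(range(len(q)), key=q.__getitem__)


def train(env: FogClusterEnv, episodes: int = 200, alpha: float = 0.05,
          gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    n_actions = len(env.fail_probs) + 1
    w = [[0.0] * (len(env.fail_probs) + 3) for _ in range(n_actions)]
    for _ in range(episodes):
        x = features(env.reset(), env.horizon)
        done = False
        while not done:
            q = q_values(w, x)
            # Epsilon-greedy exploration.
            a = rng.randrange(n_actions) if rng.random() < epsilon else argmax(q)
            next_state, r, done = env.step(a)
            x_next = features(next_state, env.horizon)
            target = r if done else r + gamma * max(q_values(w, x_next))
            td_error = target - q[a]
            # Semi-gradient Q-learning: update only the chosen action's weights.
            w[a] = [wi + alpha * td_error * xi for wi, xi in zip(w[a], x)]
            x = x_next
    return w


def greedy_rollout(env: FogClusterEnv, w: List[List[float]]) -> List[int]:
    x = features(env.reset(), env.horizon)
    done = False
    while not done:
        state, _, done = env.step(argmax(q_values(w, x)))
        x = features(state, env.horizon)
    return list(env.blocked)


if __name__ == "__main__":
    env = FogClusterEnv(fail_probs=[0.05, 0.05, 0.6, 0.05])  # d3 is unreliable
    weights = train(env)
    print("blocked after greedy rollout:", greedy_rollout(env, weights))
```

The hypothetical reward pushes the agent to keep as many devices allowed as possible while avoiding failed executions, so a well-trained policy would tend to block only the unreliable device. Because training is stochastic and a linear model is far cruder than a deep network, the printed configuration is not guaranteed; the sketch only shows how state, action, reward and weight updates fit together.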

[1] F. Carpio, A. Jukan, R. Sosa and A. J. Ferrer, “Engineering a QoS Provider Mechanism for Edge Computing with Deep Reinforcement Learning,” accepted for publication at 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, USA, 2019.